Experts discuss AI, data and human rights at U of T event

Published: February 25, 2025

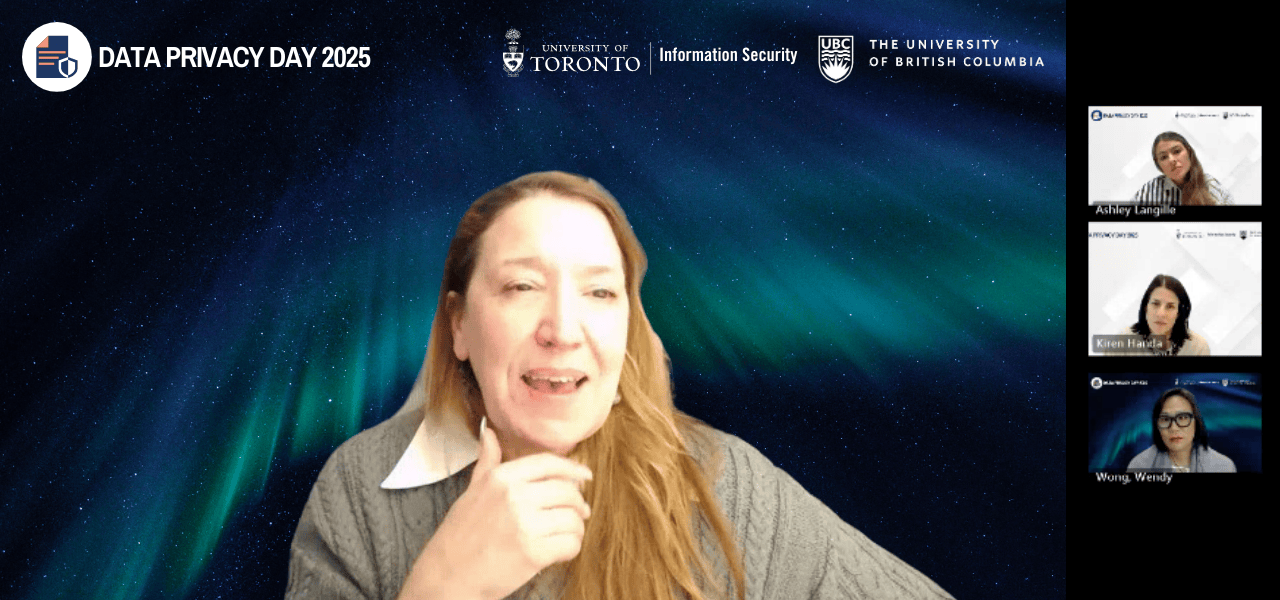

To celebrate Data Privacy Day on Jan. 28, the University of Toronto’s Acting Chief Information Officer Dr. Donna K. Kidwell hosted a lively discussion with University of British Columbia political science professor Dr. Wendy H. Wong, U of T’s Executive Director of Institutional Research and Data Governance Kiren Handa, and U of T’s Information Privacy Analyst in Information Security Ashley Langille.

With a special focus on Dr. Wong’s book, We, the Data: Human Rights in the Digital Age, the virtual conversation, attended by over 140 participants, explored privacy-by-design strategies, ethical data practices and the future of secure technology.

Breaking artificial intelligence into solvable parts

Dr. Wong began by addressing AI’s growing role in society and how breaking it down into its core elements (compute, algorithms and data) can make it easier to understand. She emphasized that policy-makers should focus on data rather than algorithms, as data directly affects people’s lives.

Dr. Wong expanded on this idea, noting that data is inherently co-created and therefore harder to control. “The truth about data is that it’s not a private thing. It’s always going to be co-created,” she said. “Many of us are tracking our daily activities and understand ourselves so much better than we used to, but tech companies and governments also have access to these data. What kinds of protections do we need as a result?”

Dr. Kidwell shared the example of zero-knowledge authentication. “One experiment I’ve been watching in California, which is about nine months in, is a digital ID. You can go into a convenience store and buy liquor, flash your ID, and the system confirms you’re old enough, without revealing your actual birthdate,” she said. “These zero-knowledge moments, where a verified source confirms a fact without exposing unnecessary details, are fascinating. I’m really interested in how we traverse it.”

Ashley noted that by focusing on data governance, individuals and organizations can push for stronger privacy protections without getting lost in the complexity of algorithmic design.

AI’s impact on marginalized groups

Dr. Wong cited research by Petra Molnar, a lawyer and anthropologist specializing in migration and human rights, on how facial recognition and surveillance technologies are used on refugees and how policing algorithms amplify systemic biases. Dr. Wong noted that groups marginalized in the analog world continue to be marginalized in digital spaces.

Molnar’s research highlights how security and border control technologies often enable excessive surveillance, privacy violations and discrimination against displaced and racialized populations. She has documented cases where opaque automated systems determine asylum claims, raising concerns about due process. Dr. Wong stressed that AI-driven tools replicate existing inequalities rather than reducing them, calling for greater scrutiny, especially in immigration and policing, where biased data can have serious consequences.

The panel agreed that addressing these issues requires a two-pronged approach: advocacy and regulatory action.

The role of consent in the digital age

Ashley raised an important concern around digital literacy, stating that many users don’t fully understand what they’re agreeing to when they consent to data collection. Dr. Wong expanded on this, comparing AI-driven data collection to lengthy terms-of-service contracts that users often accept without reading. She also highlighted the ethical dilemma of real-time transcription apps, where a student consents to using the app but the professors and classmates being recorded may not have.

Kiren emphasized how digital consent can be further complicated when data is shared and repurposed beyond its original intent, often without users’ knowledge.

The panel agreed that traditional consent models need rethinking in light of AI’s far-reaching capabilities.

The responsibility of AI developers

Ashley went on to discuss the importance of a privacy-by-design approach, which embeds ethical considerations into AI development rather than treating them as an afterthought.

Building on that point, Dr. Wong encouraged attendees to critically assess who is creating AI and what their motivations are. She cautioned that AI development is increasingly driven by a small, powerful group whose interests may not align with the broader public. She called for more transparency and accountability in how AI systems are designed and deployed.

Hope through digital literacy

Despite the challenges posed by AI, the panel remained optimistic about the power of digital literacy to promote more ethical practices. Kiren emphasized the importance of a shared understanding of AI and its impact, while Dr. Wong highlighted how public education can empower individuals to advocate for stronger policies and ethical practices.

The discussion concluded with a call for continued dialogue and collaboration. Dr. Wong directed those interested to a free chapter of her book, available through IEEE Spectrum, which explores what happens to data after death.